Human Factors and Ethics Canvas (HFEC)

Introduction

Personalised technology and intelligent/automated systems have now become part of our personal and working lives. Such technologies often involve a range of sensors (wearable, environmental and implantable) and novel artificial intelligence (AI) and machine learning (ML) components. Future technology is shaping (and will shape) our political, social and moral existence. The use and potential impact of these technologies raise many interrelated questions pertaining to ethics, law and social acceptability. In relation to ethics, these new technologies (including innovative AI & ML components) raise macro ethics questions concerning (1) the intended use and purpose of technology, (2) the role of the person, (3) the impact of these technologies on our behaviour and activities (including potentially negative consequences), and (4) societal values.

The beneficent uses of technology have a way of being co-opted for other purposes. The application of automation and advanced machine learning technologies changes the role of the person in the system. Often there is a gap between the intended use of a system and its actual use once implemented. Further, the social effects of certain technologies are not always apparent, for example the ways in which AI could affect social relationships and connections. Designers, consumers and the community have responsibilities for the technologies that are created (Adamson, 2013). Ideally, new technologies should (a) enhance lived experience, (b) protect human rights (for example, dignity, privacy, social interaction), (c) ensure human benefit and (d) prioritise human wellbeing.

Ethics & New Technology

Ethics issues are now being formally explored in commercial and research projects (European Commission, 2013). In parallel, both ethics canvases (Adapt, 2018) and data ethics canvases (ODI, 2019) are being used in technology projects. Much of the focus is on ethical issues related to data privacy and data quality (O’Keefe & O’Brien, 2018). There is some focus on the psycho-social dimensions of new technologies, their impact on behaviour and activities, and risks/safeguards in relation to the use of AI and ML technologies which make decisions impacting on human wellbeing and rights. However, there is limited integration with human factors themes and methodologies.

Human Factors & Ethics Canvas

Overall, we need methodologies to support the production and documentation of evidence in relation to addressing the human and ethical dimensions of future technologies. The responsibilities of designers and questions concerning the moral quality of technology belong to the field of Applied Ethics. However, they also belong to the field of Human Factors.

The objective of the ‘human factors and ethics’ canvas is to create an evidence map in relation to the specification of an ethically responsible technology solution that properly addresses relevant human and ethical issues. The ‘Human Factors and Ethics (HFAE) Canvas’ enables the active translation of ethical issues pertaining to the human and social dimensions of new technologies into ethically responsible solutions. The HFAE Canvas was developed across three human factors projects.

At the Centre for Innovative Human Systems (CIHS) we would like to promote engagement with issues around ethics and user acceptability. There is a need for honest conversations about the purpose of new technologies and their impact (and specifically, known and unknown consequences).

Evidence, HF Methods and the Production of an HFAE Canvas

The objective of the human factors and ethics canvas is to create an evidence map in relation to the specification of an ethically responsible technology solution that properly addresses human and ethical issues.

The ethics canvas reflects an integration of ethics and HF methods, particularly around the collection of evidence using stakeholder evaluation methods. Specifically, the ethics canvas makes use of the data gathered and analysed from literature and stakeholder evaluation methods (i.e. interviews, observations and participatory design/evaluation sessions).

A key dimension of the human factors and ethics canvas is the application of personae-based design and scenario-based design approaches. The application of a personae/scenario-based design approach and integration within an ethics canvas allows us to consider the human and ethical dimensions of these technologies.

Key performance indicators (KPIs) relevant to the potential success of this technology once it is introduced and used by the public (including psychosocial dimensions) are also specified in the ethics canvas. Importantly, translating system objectives into a set of objectives spanning key themes (for example, wellbeing, human benefit, social interaction and relationships, and societal values and norms), with associated metrics, ensures that wellbeing, human benefit and values are both a reference point and a design outcome. Further, potential failures, potential negative impacts and unknowns are also defined.

Questions pertaining to data ethics and ethics in data analytics must be asked.

Further, ethics happens and is addressed in implementation. As such, we must locate the technology from a ‘socio-technical’ perspective. Technology is one of many system dimensions to be accounted for. The HFAE Canvas calls for a holistic solution to ethical issues (i.e. technology, task design, environment, process, culture and so forth).

The human factors and ethics canvas makes use of ethical theories/perspectives that are applied to the analysis of technology innovation, and in particular to the analysis of benefit versus harm (i.e. Consequentialism, Deontology & Principlism). Principles need to be articulated and embedded in the design concept. Human factors methods are useful here in relation to considering the needs/perspectives of different stakeholders and adjudicating between conflicting goals/principles. In this way, the solution needs to carefully balance goals and issues pertaining to human benefit for different stakeholders.

About the HFAE and Stages in the HFAE

Introduction

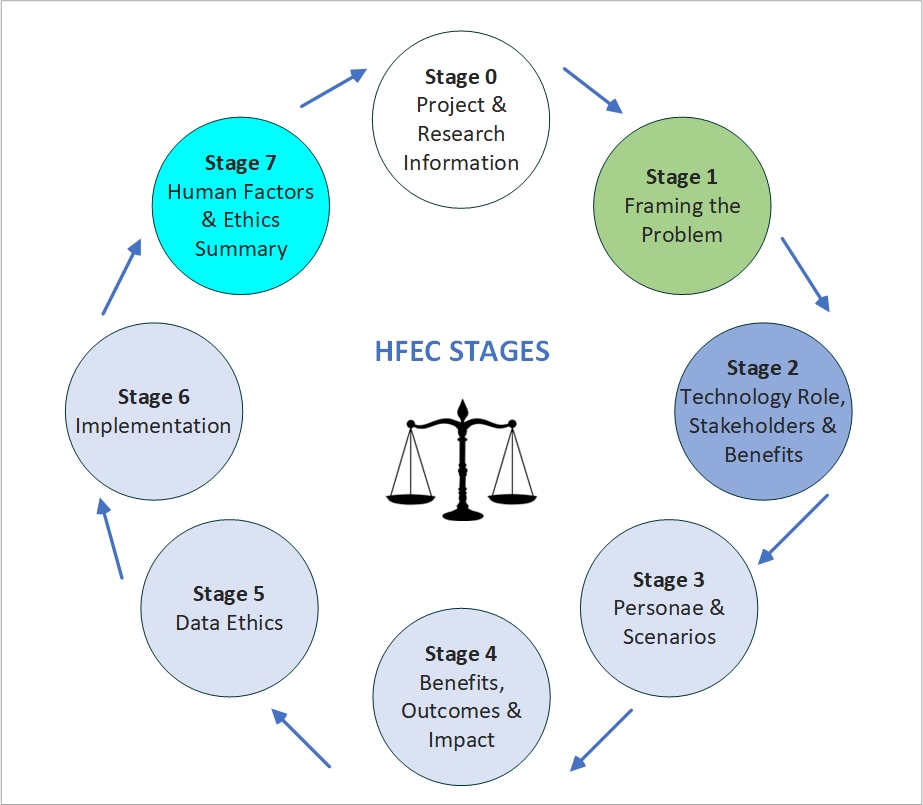

There are different stages in the HFAE Canvas. All stages are important. It is not possible to get to the last stage (i.e. the human factors & ethics summary), without progressing through the other stages.

Depending on project scope and timing, you may not have scope for some of the ‘deep-dives’. Deep dives are grouped thematically:

- Benefits, outcomes and impact (Section 3)

- Personae & Scenario (Section 4)

- Data Ethics (Section 5)

- Implementation (Section 6)

In line with stakeholder evaluation approaches, the canvas can be evaluated using the ‘community of practice’ – i.e. internal stakeholders (project team) and external stakeholders (relevant end users/stakeholders and legitimate other parties who may be impacted by the technology). At a minimum, core internal stakeholders/core team members (including an ethicist, if available, the HF lead, the design lead and the product owner/manager) are involved in completing the canvas.

Stage 0

Stage 0 records relevant project information including who is responsible for co-ordinating the HFEC inputs. Critically, it captures the point in the project (i.e. the stage in the research and innovation process) at which the HFEC was documented. Ideally, there are several iterations of the HFEC as the project progresses. Key sources of research/evidence on which the HFEC inputs are based are also documented, along with research ethics (i.e. methodologies) information.

Stage 1

Stage 1 is all about framing the problem. Values and human and ethical issues are built into how we frame the problem (and set the design brief for new technologies).

- Are you thinking about the problem in the right way and posing the right question?

- To do this, you need to understand the problem correctly: behaviour/symptoms, contributory factors and outcomes/consequences

Stage 2

Stage 2 involves understanding how the technology fits the problem, and specifically what we know about stakeholder goals and needs. A key component of this is the specification of expected benefits for different stakeholders.

Stage 3

Stage 3 is a deep dive into benefits, outcomes and impact.

Stage 4

Stage 4 involves a deep dive in relation to personae and scenario.

Stage 5

Stage 5 focuses on data ethics. The specific questions posed reflect a simplification of what is covered in the ‘Data Ethics Canvas’ (ODI, 2019).

Stage 6

Stage 6 concerns technology implementation. As noted previously, ethics both happens and is addressed in implementation. The questions posed follow from a ‘system approach’ to human factors. Technology is one of many system dimensions to be accounted for. The HFAE Canvas calls for a holistic solution to ethical issues (i.e. technology, task design, environment, process, culture and so forth).

Stage 7

The final stage presents the outcomes of the preceding analysis.
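The staged structure described above can be sketched as a small checklist. This is an illustrative sketch only, not part of the HFAE Canvas itself: the stage titles are paraphrased from the text, and the names `Stage`, `Status`, `new_canvas`, `mark`, `blocking_stages` and `summary_ready` are hypothetical. It also assumes one reading of the text, namely that only the deep dives (Stages 3 to 6) may be recorded as out of scope, and that every earlier stage must be either completed or explicitly scoped out before the Stage 7 summary.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    NOT_STARTED = "not started"
    COMPLETE = "complete"
    OUT_OF_SCOPE = "out of scope"  # assumption: allowed for deep dives only


@dataclass
class Stage:
    number: int
    title: str               # paraphrased from the stage descriptions
    deep_dive: bool = False  # Stages 3-6 are the thematic deep dives
    status: Status = Status.NOT_STARTED


def new_canvas() -> list[Stage]:
    """Return Stages 0-7 in order, all not yet started."""
    return [
        Stage(0, "Project information"),
        Stage(1, "Framing the problem"),
        Stage(2, "Technology fit, stakeholder goals and needs"),
        Stage(3, "Benefits, outcomes and impact", deep_dive=True),
        Stage(4, "Personae and scenario", deep_dive=True),
        Stage(5, "Data ethics", deep_dive=True),
        Stage(6, "Implementation", deep_dive=True),
        Stage(7, "Human factors and ethics summary"),
    ]


def mark(canvas: list[Stage], number: int, status: Status) -> None:
    """Record the status of a stage; only deep dives may be scoped out."""
    stage = canvas[number]
    if status is Status.OUT_OF_SCOPE and not stage.deep_dive:
        raise ValueError(f"Stage {number} is not a deep dive and cannot be skipped")
    stage.status = status


def blocking_stages(canvas: list[Stage]) -> list[int]:
    """Stages before the summary that have not yet been addressed."""
    return [s.number for s in canvas[:-1] if s.status is Status.NOT_STARTED]


def summary_ready(canvas: list[Stage]) -> bool:
    """True once every stage before Stage 7 is complete or scoped out."""
    return not blocking_stages(canvas)
```

For example, a project team could call `mark(canvas, 5, Status.OUT_OF_SCOPE)` to record that the data ethics deep dive was deliberately left out of an early iteration, and use `blocking_stages(canvas)` to see which stages still need input before the summary is written.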

White Paper & Example ‘Human Factors & Ethics Canvas’

The Specification of a ‘Human Factors and Ethics’ Canvas for Socio-technical Systems.

Human Factors and Ethics Canvas

Podcasts

Episode 1: Dr Joan Cahill: The Ethical Challenges of AI

For Episode #5 of the One Zero One podcast, Ken MacMahon, Head of Technology and Innovation at Version 1 explores the increasingly pertinent topic of Ethical AI and human-centric design with Dr. Joan Cahill, Principal Investigator/Research Fellow at the Centre for Innovative Human Systems (CIHS), Trinity College Dublin, Ireland.

In this podcast, Joan gives listeners a view into Human Factors, an interdisciplinary area of psychology, providing us with an understanding of Ethical AI considerations. Joan illustrates how technology makers have the power to positively and negatively impact users of new technologies and processes, and that this is an increasingly important responsibility for technologists to consider. From highlighting the potential of machine learning and AI for senior drivers using autonomous vehicles, to looking at how technology can be harnessed to positively impact patients in healthcare if stakeholders’ motivations and outcomes are appropriately considered, Joan shares some very interesting topics throughout this episode.

Listen or download this episode to hear more from Joan Cahill regarding Ethical AI, the importance of stakeholder-based research in design, and why it is so critical to have multi-disciplinary teams in the room when designing technology from the outset.

Episode 2: Ethics in Data Science - Expert Panel: The Rise of the Algorithm and the Implications for Ethics in Data Science

For Episode #8 of the One Zero One podcast, Version 1, in association with the Analytics Institute, brought together perspectives from academia, media, and industry to discuss the challenges of responsible data science in the age of the algorithm. This podcast was recorded live during the interactive event with live polling and Q&A technology.

The role of Data Science in today’s technology-driven world impacts a range of industries from the health sector to financial services, to developing smart cities and transportation.

Although the benefits of Data Science have become seemingly clear and its impact noted across said environments, the dangers of data science without ethical considerations are equally apparent — whether it be the protection of personal data, the bias in automated decision-making, the apparent elimination of free choice in psychographics or the social impacts of automation.

Ronan Laffan, Head of Advisory Services at Version 1, hosted this episode. The expert panel for the discussion featured:

- Dr. Joan Cahill, Centre for Innovative Human Systems, Trinity College Dublin

- Dr. Katherine O’Keefe, Chief Ethicist, Castlebridge

- Niall O’Neil, Chief Strategy Officer, OneView Healthcare

- Garvan Callan, Financial Services Transformation Advisor, OneZero1

Listen or download this episode to hear an in-depth discussion around Data Ethics, and what our panel feel is of importance for businesses, professionals and individuals to consider when it comes to utilising emerging technologies.

Download Publications and Presentations

Download the introductory presentation about the Human Factors and Ethics Canvas here.

Cahill, J., Cromie, S., Crowley, K., Kay, A., & Gormley, M. Embedding Ethics in Human Factors Design & Evaluation Methodologies. Panel Presentation at HCI International 2020. Copenhagen, Denmark, 19 to 24 July, 2020.

Cahill, J., Cromie, S., Crowley, K., Kay, A., Gormley, M., Kenny, E., Hermman, S., Doyle, C., Hever, A., & Ross, R. (2020). Ethical Issues in the New Digital Era: The Case of Assisting Driving. Book Chapter in ‘Ethics, Laws, and Policies for Privacy, Security, and Liability’. Intech Publishing. DOI: 10.5772/intechopen.88371. https://www.intechopen.com/online-first/ethical-issues-in-the-new-digital-era-the-case-of-assisting-driving

Cahill, J., Cromie, S., Crowley, K., Kay, A., & Gormley, M. Advancing an ‘Ethics Canvas’ for New Driver Assistance Technologies targeted at Older Adults – embedding ethics in human factors design methodologies. Paper to be Presented at HCI International 2020. Copenhagen, Denmark, 19 to 24 July, 2020.

Cahill, J., McLoughlin, S., O Neil, N., & Wetheral, S. (In Press). Ambient assisted living and ethical issues pertaining to patient and care monitoring. In ‘Assisted Living: Current Issues and Challenges’. Nova Science Publishers.

Contacts

For more information, please contact:

- Dr Joan Cahill: cahilljo@tcd.ie