Transformer Load Minimisation in Smart-Grids using Transfer Learning

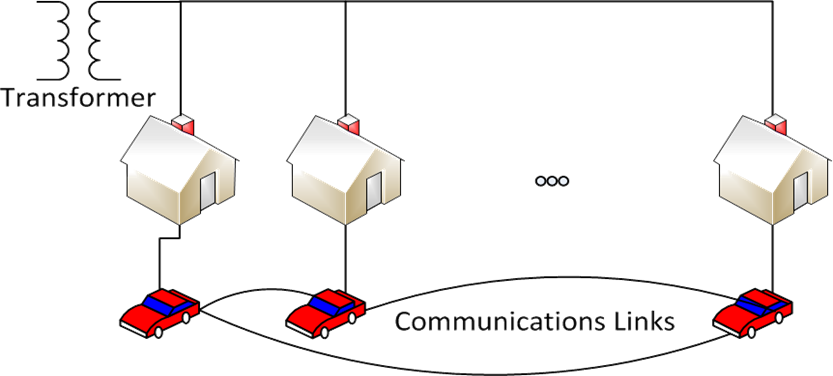

Controlling a system as complicated as the smart-grid cannot be done centrally, as it can consist of hundreds of thousands of devices. Devices must be able to make decisions locally based on the information available to them. Over time they can learn how successive decisions can help them achieve their aims. Each of these devices must meet its own goals (e.g. an Electric Vehicle (EV) must be charged by morning) but it must also satisfy the goals of the overall grid. In accomplishing the grid's objective, significant improvements in operational efficiency can be achieved. For example, if the grid tells individual devices that there is a surplus of energy (perhaps because it is a particularly windy day), they can operate earlier than they otherwise would have. The surplus can then be used rather than being stored or discarded.

In much of our work on energy demand management, we use Multi-Agent Systems (MAS). In this approach each device is controlled by an agent (a decision-making entity). Because each device is represented as an agent, devices can form teams and learn better ways to reach their goals than they could on their own. However, learning in MAS takes a significant amount of time due to the dimensionality of the problem. This work investigates a Transfer Learning based approach with the aim of reducing the learning time.

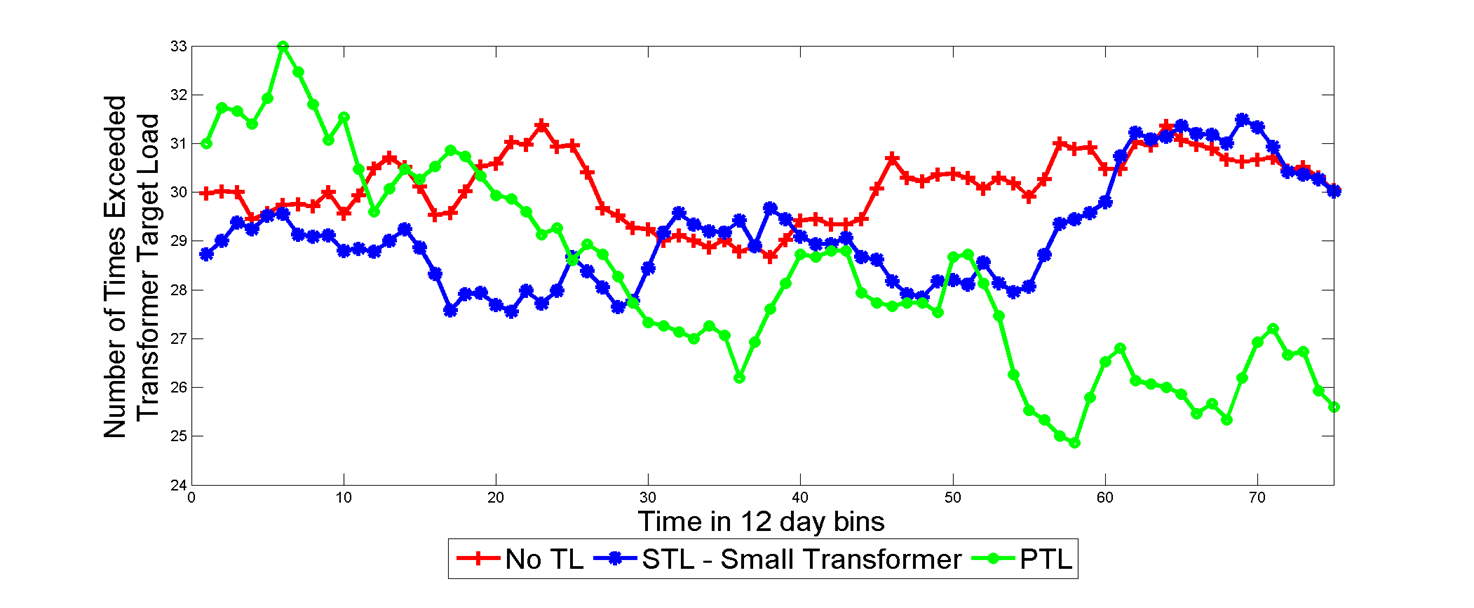

EVs place significant load on transformers because they tend to follow the same charging pattern, e.g., charging on arrival from work or charging at midnight. Each device has a similar goal (e.g., minimise the cost of charging), so there is scope to share knowledge and thereby minimise the learning time. Reductions in learning time lead to increases in performance: in this case, smoother loading of the transformer.

Transfer Learning (TL)

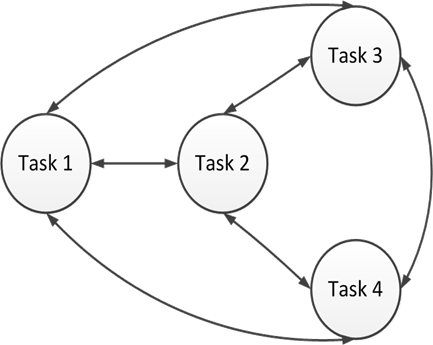

In TL, a process gets information from a source agent, translates it, and transfers it to a target agent. This is done off-line from learning.
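The off-line step described above can be sketched as follows. This is a minimal illustration, assuming each agent stores its knowledge as a table of values keyed by (state, action) pairs, which the text does not specify. The names `transfer_offline` and `hour_to_slot`, and all numeric values, are hypothetical; `mapping` stands in for whatever translation turns source pairs into the target's representation.

```python
from typing import Callable, Dict, Hashable, Optional, Tuple

Pair = Tuple[Hashable, Hashable]   # (state, action)
QTable = Dict[Pair, float]         # assumed knowledge representation


def transfer_offline(
    source_q: QTable,
    mapping: Callable[[Pair], Optional[Pair]],
) -> QTable:
    """Build an initial table for a target agent from a trained source agent."""
    target_q: QTable = {}
    for pair, value in source_q.items():
        translated = mapping(pair)     # translate into the target's terms
        if translated is not None:     # skip pairs with no counterpart
            target_q[translated] = value
    return target_q


def hour_to_slot(pair: Pair) -> Optional[Pair]:
    """Hypothetical mapping: source indexes time by hour, target by half-hour slot."""
    (hour, price), action = pair
    return ((hour * 2, price), action)


# Made-up source knowledge for one EV agent.
source_q = {
    ((22, "low_price"), "charge"): 0.8,
    ((18, "high_price"), "charge"): -0.4,
}
initial_q = transfer_offline(source_q, hour_to_slot)
```

The target agent would start learning from `initial_q` rather than from an empty table; no information flows between the agents once learning has begun.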

Parallel Transfer Learning (PTL)

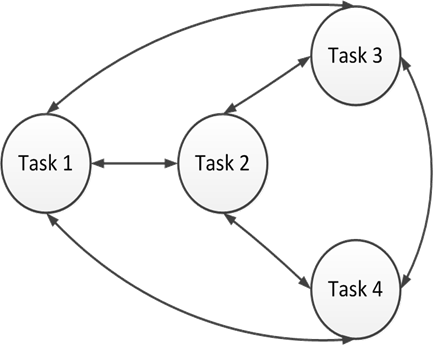

In PTL, any process can function as a source, target or both at any one time. Agents transfer information at runtime, rather than off-line as in traditional Transfer Learning. Information is transferred only when it is deemed ready to share by the source task.

Fig 1. Transfer learning

Fig 2. Parallel transfer learning

PTL Algorithm

Source Agent

    Select target agent from set of neighbours
    Select state-action pair to transfer
    Translate pair via inter-task mapping
    Send to target

Target Agent

    While (information to process)
        Get local state-action pair
        If (not visited) accept neighbour's pair
        Else decide whether information is more accurate
            If so accept it and merge it with local information
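The source and target steps above can be sketched in Python. This is an illustration under several assumptions that the poster does not state: knowledge is a table of values keyed by (state, action) pairs, a pair is "ready to share" once it has been visited at least `min_visits` times, "more accurate" is approximated by a higher visit count, and merging is a visit-weighted average. The `Agent` class and all of its fields are hypothetical names.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Dict, Hashable, List, Tuple

Pair = Tuple[Hashable, Hashable]  # (state, action)


@dataclass
class Agent:
    name: str
    q: Dict[Pair, float] = field(default_factory=dict)      # learned values
    visits: Dict[Pair, int] = field(default_factory=dict)   # local experience only
    inbox: List[Tuple[Pair, float, int]] = field(default_factory=list)
    neighbours: List["Agent"] = field(default_factory=list)

    def send(self, mapping: Callable[[Pair], Pair], min_visits: int = 5) -> bool:
        """Source side: choose a neighbour and a ready pair, translate, send."""
        ready = [p for p, n in self.visits.items() if n >= min_visits]
        if not ready or not self.neighbours:
            return False                        # nothing is ready to share yet
        target = random.choice(self.neighbours)
        pair = max(ready, key=lambda p: self.visits[p])   # most-visited pair
        target.inbox.append((mapping(pair), self.q[pair], self.visits[pair]))
        return True

    def receive(self) -> None:
        """Target side: process every pending message."""
        while self.inbox:
            pair, value, n_src = self.inbox.pop(0)
            n_local = self.visits.get(pair, 0)
            if n_local == 0:
                self.q[pair] = value            # not visited: accept as-is
            elif n_src > n_local:               # assumed accuracy test
                w = n_src / (n_src + n_local)
                self.q[pair] = w * value + (1 - w) * self.q.get(pair, value)
            # otherwise keep the local estimate unchanged


# Two EV agents sharing one representation, so the mapping is the identity.
a = Agent("ev_a", q={("s0", "charge"): 1.0}, visits={("s0", "charge"): 10})
b = Agent("ev_b", q={("s0", "charge"): 0.0}, visits={("s0", "charge"): 2})
a.neighbours.append(b)
sent = a.send(mapping=lambda p: p)
b.receive()
```

In this sketch `visits` counts only an agent's own experience, so received values never make a pair look "ready" for onward transfer. That is one possible design choice, not something the algorithm above prescribes.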