Spatial Audio: New Frontiers in Virtual Reality

with

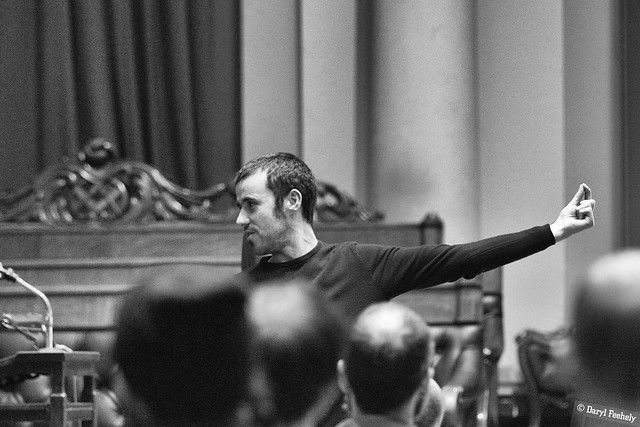

Professor Frank Boland and Dr Enda Bates of the School of Electronic and Electrical Engineering, Trinity College Dublin

Article by Dr Kate Smyth, Consultancy Officer, Trinity Research and Innovation

When we go to the cinema or play video games, we often focus on what we see. We are impressed with the quality of special effects on screen or the way the characters have the capacity to interact with their surroundings in a game. What we do not tend to focus on is how the audio that accompanies these visuals has a huge impact on our engagement with the experience.

According to Dr Enda Bates and Professor Frank Boland, who are both electronic and electrical engineers and academics at Trinity College Dublin, audio tends to be “an afterthought” in films or games. But Dr Bates draws on the words of George Lucas, who claimed that “sound is half the experience.” Bates makes the point that if the audio is substandard, people will notice something is wrong. It is the combination of visuals and sound that creates a realistic and engaging experience for the user.

At Trinity College Dublin, in 2008, Professor Boland formed a Spatial Audio Research Group to explore how to provide lifelike perceptions of sound to accompany the visual experience. Boland’s expertise is in the mathematics and algorithms of adaptive systems and digital signal processing for audio and acoustics. After working in this area for years, in October 2013, funded by Enterprise Ireland, Boland began a project to develop a technology for delivering solutions to audio problems, particularly while using headphones. This work produced THRIVE, a 3D audio system for gaming applications using virtual reality headsets to provide the user with an immersive experience.

Virtual reality refers to the creation of a 360-degree environment, transmitted through a headset. The user can move their head but not their body, and the virtual environment blocks out the real world. “The challenge that we were looking at,” Boland says, “was that when you put headphones on, you get this internalisation of audio. Your perception of audio is that it’s between your ears, in your head.” His research focused on the delivery of audio using headphones, “so that it appears to arrive from where the source is on the screen” and the user can “associate the sound with cues on the screen.” Dr Bates then joined Boland’s group in 2015, bringing his expertise in spatial audio and music to the table.

Their goal was to resolve some of the issues that make the audio seem inaccurate or unrealistic, which disrupts the user’s immersive experience. In virtual reality environments, the sound depends on the position of the listener’s head. If the head moves, the audio seems to move with it, which is what regularly happens when listening through headphones. The user’s experience is less realistic as a result, and the sound (such as a car alarm or someone speaking) no longer appears to originate from its source onscreen. THRIVE was designed to gather data that could be used to track the user’s head movement, allowing for the development of audio that matches the way a user moves when interacting with a virtual environment. This patented technology was licensed to Google in 2014 and was released in March 2018 as Resonance Audio, which is impressively described on its website as “a technology that simulates how sound waves interact with human ears and their environment.”
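To make the head-tracking idea concrete, here is a minimal sketch in Python. It is not the THRIVE or Resonance Audio method, which the article does not detail. It only illustrates the principle that a sound's direction should be recomputed relative to the listener's head each time the head turns. The function names, the angle convention (degrees, positive to the listener's right) and the simple constant-power stereo pan are all assumptions made for illustration; a real system would use far more sophisticated filtering that also handles elevation and sounds behind the listener.

```python
import math


def relative_azimuth(source_azimuth_deg: float, head_yaw_deg: float) -> float:
    """Direction of the source relative to where the head is facing.

    Both angles are in degrees in the same world frame (assumed convention:
    positive means to the right). The result is wrapped to [-180, 180).
    """
    return (source_azimuth_deg - head_yaw_deg + 180.0) % 360.0 - 180.0


def stereo_gains(rel_azimuth_deg: float) -> tuple[float, float]:
    """Left and right gains from a crude constant-power pan.

    This is an illustrative stand-in for real binaural rendering. It uses
    only the left-right component of the direction, so a source behind the
    listener sounds the same as its mirror image in front.
    """
    lateral = math.sin(math.radians(rel_azimuth_deg))  # -1 (left) .. +1 (right)
    theta = (lateral + 1.0) / 2.0 * (math.pi / 2.0)    # 0 .. pi/2
    return math.cos(theta), math.sin(theta)


if __name__ == "__main__":
    # A car alarm fixed straight ahead in the virtual scene.
    alarm_azimuth = 0.0
    for yaw in (0.0, 45.0, 90.0):
        rel = relative_azimuth(alarm_azimuth, yaw)
        left, right = stereo_gains(rel)
        print(f"head yaw {yaw:5.1f}: source at {rel:6.1f}, L={left:.2f} R={right:.2f}")
```

Without the subtraction of the head yaw, the alarm would stay centred between the ears however the listener turned, which is the "audio moves with the head" effect described above. With it, turning the head to the right shifts the alarm towards the left ear, so it appears to stay fixed at its source in the scene.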

According to Boland, “in the past five years there have been extraordinarily rapid developments in technology for generating visual experiences that immerse a user in a virtual world or provide views of the real world that are augmented by synthesised material to create a mixed reality.” Pokémon Go is a recognisable example. But there is a sense that we are on the verge of more complex things. The slick website for the virtual and augmented reality device Magic Leap boasts of a “lightweight, wearable device that brings the digital world seamlessly into the physical one.”

To the uninitiated, like myself, this kind of technological development seems reminiscent of Black Mirror or the novels of JG Ballard. But there is a myriad of possibilities here. This kind of technology could be applied not only in entertainment, but also in areas like education and healthcare (for example, doctors could devise the best plans for surgery using augmented reality headphones). It could also be employed by businesses and companies selling products. An example Bates gives is that if a consumer wanted to see what a new lamp would look like in their sitting room, they could hold up a smartphone and, through it, see a real-time rendering of what that lamp would look like on the coffee table.

But let’s bring it back to the technology’s entertainment value. Boland says that “engineering a solution to this challenge is important as otherwise there would be a dissonance in the audio-visual experience.” This would pull the user out of the experience. According to Bates, for the creation of such a believable experience, “it is ultimately sound that provides a sense of immersion within a scene, whether it’s the real world around us, or indeed a virtual one created using a head-mounted display.”

Another important factor is what Bates describes as “spatial sound”. If a story is being told within a virtual or augmented reality game, how do you guide what the user is looking at? How can you direct their attention, for the purposes of the story? Just as in real life, you do this using spatial sound: sound coming from a specific direction. This kind of spatial sound is sometimes compared to surround-sound in the cinema. But re-creating an accurate and realistic experience of spatial sound through headphones is the challenge being tackled by Boland and Bates.

This research also incorporates what Bates calls psychoacoustics, which involves an understanding of human cognition and depth perception with regard to hearing and sound. Everyone’s ears are unique, so it is difficult to design a technology that will solve these problems and be translatable across users. In addition, the accurate placement of sounds at different distances depends significantly on the particular acoustics of the environment. One particular challenge for augmented reality, therefore, is that the application must dynamically process the sound in a way that matches the local acoustic environment, whether that is an office, a city street, or a cathedral.

Without such accuracy, the user is immediately reminded that their experience is not real. This is where artificial intelligence may be a factor: the technology could potentially learn to adapt to a given context or requirement. Such a context-aware technology would mean that if the virtual space changes as the person is walking through it, the sound changes accordingly.

Bates and Boland feel that the ongoing developments in virtual and augmented reality technology are a good impetus for solving these issues relating to sound and human cognition, and are inspired by the explorative possibilities that lie ahead.

July 2019